Attention Is All You Need

This is the 2017 research paper from Google that is widely credited with starting the modern AI revolution by introducing the Transformer architecture.

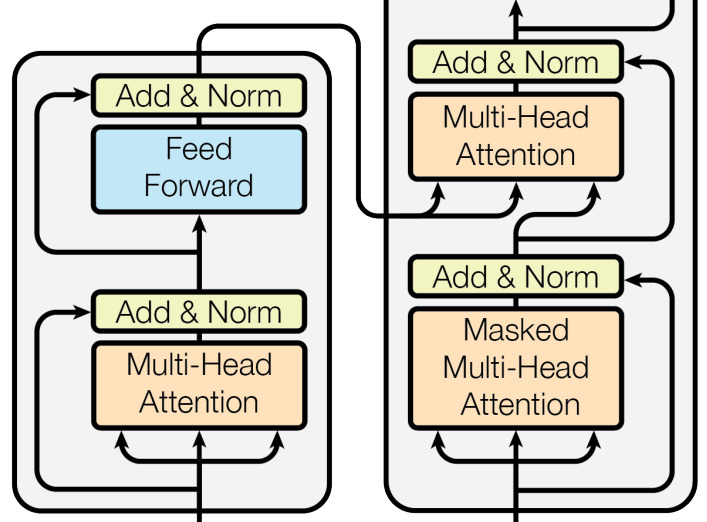

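The paper begins by noting that recurrent neural networks, in particular long short-term memory (LSTM) and gated recurrent networks, had become the standard approach to sequence modeling tasks such as language translation. It then proposes the Transformer, an architecture that dispenses with recurrence and convolutions entirely and relies solely on attention mechanisms, which makes training much more parallelizable. The authors evaluate the model on the WMT 2014 English-German and English-French translation tasks, and compare several variations of the architecture on English-German. They also describe the contributions of individual team members, including Llion Jones, Łukasz Kaiser, and Aidan Gomez.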
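The operation the Transformer is built on is scaled dot-product attention, Attention(Q, K, V) = softmax(QK^T / √d_k) V. Below is a minimal pure-Python sketch of that formula for a single head. It assumes queries, keys, and values are given as plain nested lists, and it leaves out the learned projections, multiple heads, and masking that the full model uses. The function names are illustrative and do not come from any library.

```python
import math


def softmax(xs):
    # Subtract the max before exponentiating for numerical stability.
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(Q, K, V):
    """Compute softmax(Q K^T / sqrt(d_k)) V on nested lists.

    Q: n_q rows of length d_k, K: n_k rows of length d_k,
    V: n_k rows of length d_v. Returns n_q rows of length d_v.
    """
    d_k = len(K[0])
    d_v = len(V[0])
    scale = 1.0 / math.sqrt(d_k)
    outputs = []
    for q in Q:
        # Dot product of this query with every key, scaled by 1/sqrt(d_k).
        scores = [scale * sum(qi * ki for qi, ki in zip(q, k)) for k in K]
        weights = softmax(scores)
        # Each output row is the attention-weighted average of the value rows.
        outputs.append(
            [sum(w * v[j] for w, v in zip(weights, V)) for j in range(d_v)]
        )
    return outputs


if __name__ == "__main__":
    # One query that matches the first key more closely than the second.
    Q = [[1.0, 0.0]]
    K = [[1.0, 0.0], [0.0, 1.0]]
    V = [[10.0, 0.0], [0.0, 10.0]]
    print(scaled_dot_product_attention(Q, K, V))
```

The query is most similar to the first key, so the output leans toward the first value row; because the softmax weights sum to 1, each output is a convex combination of the value rows. The 1/√d_k factor is the scaling the paper introduces to keep dot products from growing large as d_k increases.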